Jonathan Weiner DrPH, professor of Health Policy & Management and of Health Informatics and director of the ACG System R&D Team at the Johns Hopkins University Bloomberg School of Public Health, Baltimore, and a Predictive Modeling News Editorial Advisory Board member, reports that the team at The Johns Hopkins Center for Population Health IT has just published research in the American Journal of Managed Care on the impact of lab tests on predictive modeling. The takeaway points (as featured in Predictive Modeling News, August 2018) are these:

The article, “Assessing Markers From Ambulatory Laboratory Tests for Predicting High-Risk Patients,” is available here. Here are additional excerpts:

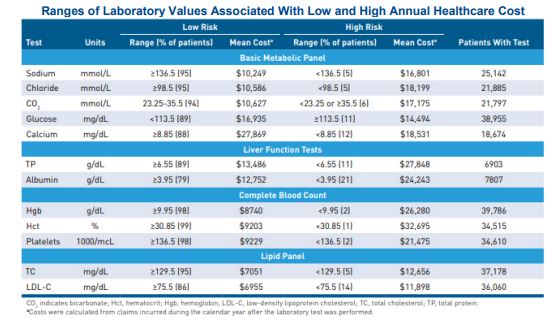

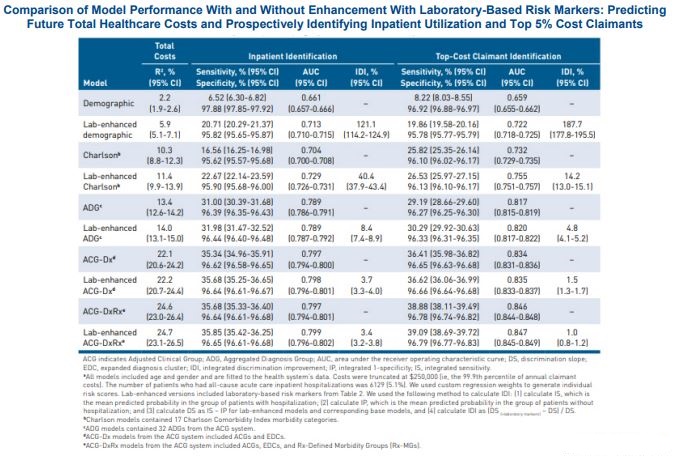

“Although claims remain an important source of risk data,” the article explains, “the widespread implementation of EHRs and other clinical information technology systems offers a new source of data on disease severity and health status, as most EHRs contain information not captured in claims, such as laboratory values, vital signs and clinical assessments.” Lab tests “can be powerful predictors among certain patient populations,” it adds, so researchers set out to “transform them into risk measures that could be useful when added to population-level predictive models.” Interestingly, the researchers add, “some tests that are commonly used to stage disease or guide treatment were not predictive of prospective cost in our analysis.”

The bottom line: “Organizations that apply strategies for high-risk case finding may want to consider adding laboratory-based risk markers to their models,” the article notes. “Those data may prove useful for a range of applications in the population health surveillance and care management domains.”

Predictive Modeling News talked to three of the paper’s authors at CPHIT — Klaus Lemke PhD, biostatistician; Kimberly Gudzune MD MPH, assistant professor of medicine; and Weiner — about adequate data and the impact of “missing” tests.

Klaus Lemke PhD, Kimberly Gudzune MD MPH & Jonathan Weiner DrPH: For established populations, where retrospective analyses are done using full claims or interoperable EHRs, the standard is comprehensive models using comprehensive risk adjusters like HCCs or ACGs. But for some standalone organizations (like ACOs or PBMs or doctor groups), or for health plans in some situations, such as when a patient is new to the system, the context is analogous to the more limited data availability model we included in our analysis. When calculating the ROI of including lab results data in a predictive modeling program, one needs to consider how easy it is to get these data and what else you might do with them if they are categorized in a cogent risk stratification system, as we did. For example, even if an organization is using advanced ICD PM models, if lab data are easily accessible, then a lab stratification system such as ours would help to add cogent information for a care manager. In such a situation, it will certainly be worth adding lab information as we did, for reasons beyond just the modest bump in a model’s predictive power.

KL, KG & JW: In our analysis, only 49% of individuals in our general enrolled population had results for at least one of the 23 common lab tests we examined during the study period. This lack of laboratory data for many patients may impact lab-oriented predictive modeling. While encouraging physicians to order these tests would improve data completeness, prompting them to order tests that may not be clinically warranted could also lead to increased costs with limited benefits for the population overall. Along these lines, we are currently exploring how “missing” lab tests (when they are, in fact, clinically indicated) may play a role in predictive modeling and care management decisions. Of course, the impact of clinicians ordering labs appropriately (and, for that matter, ordering drugs or assigning diagnoses) goes well beyond implications for improved predictive modeling or risk adjustment. But, yes, if lab-based analytics became more commonplace, lab ordering and the subsequent results might get more scrutiny and might factor even more strongly into care management decisions than they do today. Furthermore, lab classification frameworks like the one we developed will help clinicians and others cut through the information overload from routine lab results data, just as other aspects of our ACG System help cut through the “noise” associated with the multiple diagnoses and medications that can have billions of unique combinations for each patient.
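To make the coverage figure above concrete, here is a minimal sketch of how an analyst might compute the share of an enrolled population with at least one result for a set of tests. It is an illustration only and not the authors' method: the function name `lab_coverage`, the member IDs, and the test names are all hypothetical.

```python
from typing import Iterable, Set, Tuple


def lab_coverage(
    member_ids: Iterable[str],
    lab_results: Iterable[Tuple[str, str]],
    tests_of_interest: Set[str],
) -> float:
    """Return the share of enrolled members with at least one result
    for any test in tests_of_interest.

    lab_results is an iterable of (member_id, test_name) pairs.
    """
    members = set(member_ids)
    if not members:
        return 0.0
    # Count each member once, and ignore results for people outside
    # the enrolled population or for tests we are not studying.
    covered = {
        member
        for member, test in lab_results
        if member in members and test in tests_of_interest
    }
    return len(covered) / len(members)


# Hypothetical toy data: four enrolled members, five lab rows.
members = ["m1", "m2", "m3", "m4"]
results = [
    ("m1", "hba1c"),
    ("m1", "creatinine"),
    ("m3", "hba1c"),
    ("m9", "hba1c"),      # not enrolled, ignored
    ("m2", "vitamin_d"),  # not a test of interest, ignored
]
tests = {"hba1c", "creatinine"}

coverage = lab_coverage(members, results, tests)
print(f"{coverage:.0%}")
```

Members without any qualifying result stay in the denominator, which is what makes the measure a statement about data completeness rather than about the tested subgroup.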

KL, KG & JW: Yes, exactly. For new enrollees or in organizations where comprehensive electronic data are not yet available, the first round of lab tests will provide one more piece of information to help assess the risk and needs of the patient.

KL, KG & JW: We have a few thoughts on this. First, within an organization, a model upgrade using previously untapped data sources should involve multiple parties, including the end users, the analytic team that will embed the model into workflows, and data administrators. On the technical side, a fundamental requirement for making lab data useful for analytics is standardization.

Most clinical labs send their results electronically, directly from their lab assay computer systems to the provider. Data from multiple labs will then need to be collated on an interoperable basis within the patient’s EHR. Like other EHR data, lab data in its raw form may or may not be standardized. The so-called “LOINC” coding system is a leading standard for lab data exchange, but its use within EHR systems is not completely universal. We are pegging our future ACG development work on labs to the LOINC standard.
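The standardization step described above can be sketched as a crosswalk from each lab's local test code to a LOINC code, so that results from different labs can be pooled. This is a hedged illustration, not the ACG System's implementation: the lab names, local codes, and the `LOCAL_TO_LOINC` table are hypothetical, and the two LOINC codes shown (4548-4 for hemoglobin A1c, 2160-0 for serum or plasma creatinine) should be checked against the official LOINC table before any real use.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical crosswalk: (source lab, local test code) -> LOINC code.
# Verify codes against the official LOINC release before relying on them.
LOCAL_TO_LOINC: Dict[Tuple[str, str], str] = {
    ("lab_a", "HBA1C"): "4548-4",
    ("lab_b", "A1C%"): "4548-4",
    ("lab_a", "CREAT"): "2160-0",
}


@dataclass(frozen=True)
class LabResult:
    patient_id: str
    source: str       # which lab sent the result
    local_code: str   # that lab's own test identifier
    value: float


def to_loinc(result: LabResult) -> Optional[str]:
    """Look up the LOINC code for a result, or None if it is unmapped."""
    key = (result.source, result.local_code.strip().upper())
    return LOCAL_TO_LOINC.get(key)


def group_by_loinc(
    results: Iterable[LabResult],
) -> Tuple[Dict[str, List[LabResult]], List[LabResult]]:
    """Pool results by LOINC code; return unmapped results separately."""
    grouped: Dict[str, List[LabResult]] = {}
    unmapped: List[LabResult] = []
    for result in results:
        code = to_loinc(result)
        if code is None:
            unmapped.append(result)
        else:
            grouped.setdefault(code, []).append(result)
    return grouped, unmapped


# Hypothetical toy data from two labs with different local codes.
raw = [
    LabResult("p1", "lab_a", "hba1c", 7.2),
    LabResult("p2", "lab_b", "A1C%", 6.1),
    LabResult("p1", "lab_a", "CREAT", 1.0),
    LabResult("p3", "lab_b", "XYZ", 3.3),  # no mapping available
]
grouped, unmapped = group_by_loinc(raw)
print(sorted(grouped), len(unmapped))
```

Keeping unmapped results in their own list, rather than silently dropping them, gives data administrators a worklist of local codes that still need a LOINC mapping.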

KL, KG & JW: The US health care system is moving towards an era in which many new sources of data beyond claims will be available for predictive modeling and other analytics. Perhaps the most important new source is the EHR and other new clinical streams of data. Arguably, the lab results are one of the two or three most prevalent new sources of structured clinical data that we all need to figure out how to use beyond one-patient-at-a-time clinical care.

The researchers add: “The article is available online here. We at the Johns Hopkins Center for Population Health IT are the home of the JHU ACG System’s R&D. We are pleased to have an active portfolio to develop state-of-the-art tools to ‘ingest’ many new types of data for analytics in support of improved population health. The next release of the ACG System (12.0) will include new approaches for using lab results across large populations to offer new classifiers for care managers and analysts that otherwise would not be feasible with just the raw lab data. Thank you for your interest. We invite readers to contact us if they have any further questions at lemke1@jhu.edu.”